AI Field Day 6: MemVerge on Supercharging AI Infrastructure

Jay Cuthrell

Chief Product Officer

Hi, I’m Jay Cuthrell and I’m a delegate for Tech Field Day this week for AI Field Day 6 in Silicon Valley on January 29–January 30, 2025. I’m sharing my live blogging notes here and will revisit with additional edits, so bookmark this blog post and subscribe to the NexusTek newsletter for future insights.

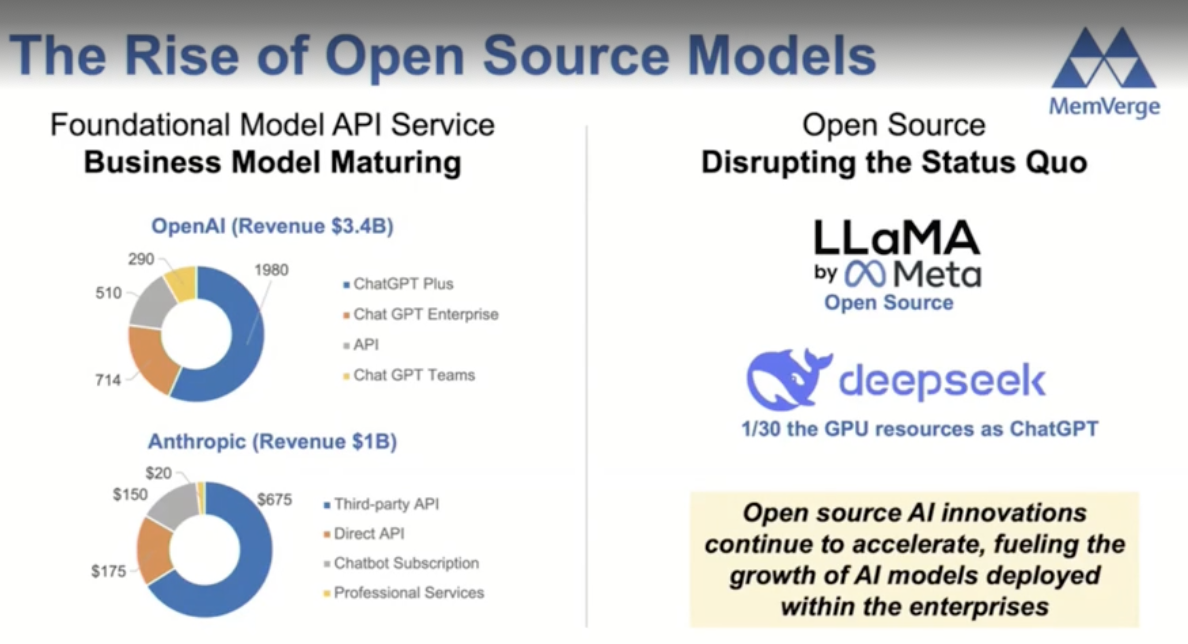

Charles Fan, CEO and Co-Founder of MemVerge, shared the company's vision and made the case for improving the Enterprise AI infrastructure stack. He argued this matters most now, as rising adoption of both APIs and Open Source models makes a bigger impact while business models mature and the status quo is disrupted.

MemVerge Rise of Open Source Models

Arguably, the API economy of the hyperscalers is unlocking innovation at a staggering pace. However, Enterprises also hold proprietary data. They want to use APIs without compromising privacy or IP protection, and they want to take advantage of Open Source models within their own AI data centers.

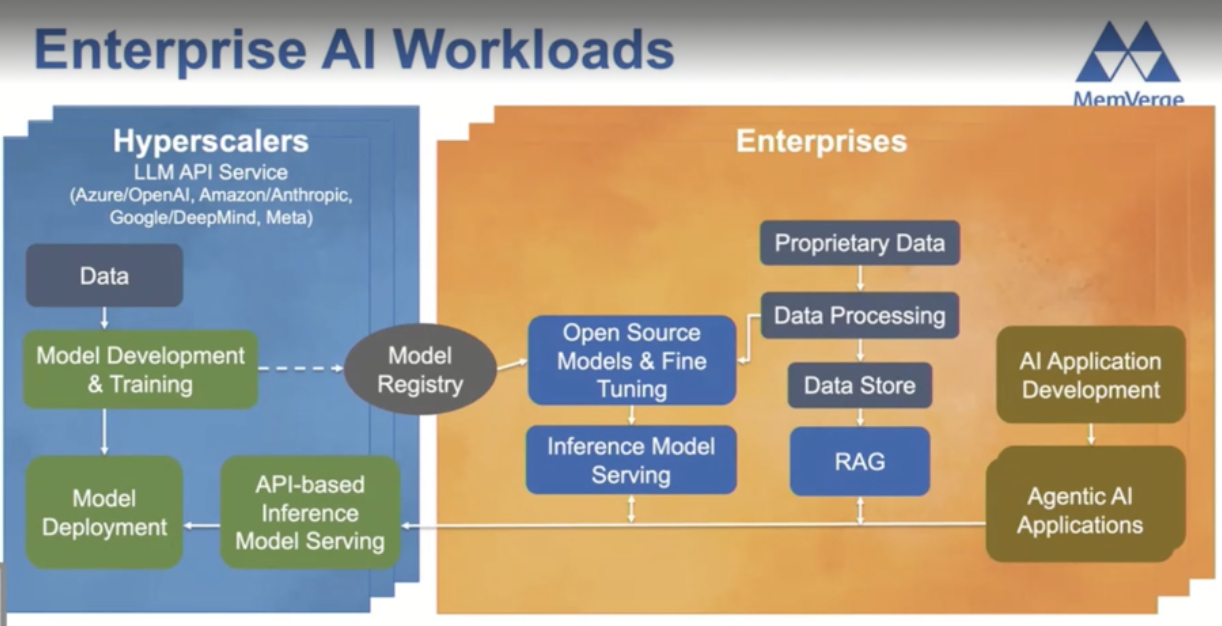

MemVerge view of Hyperscalers and Enterprise

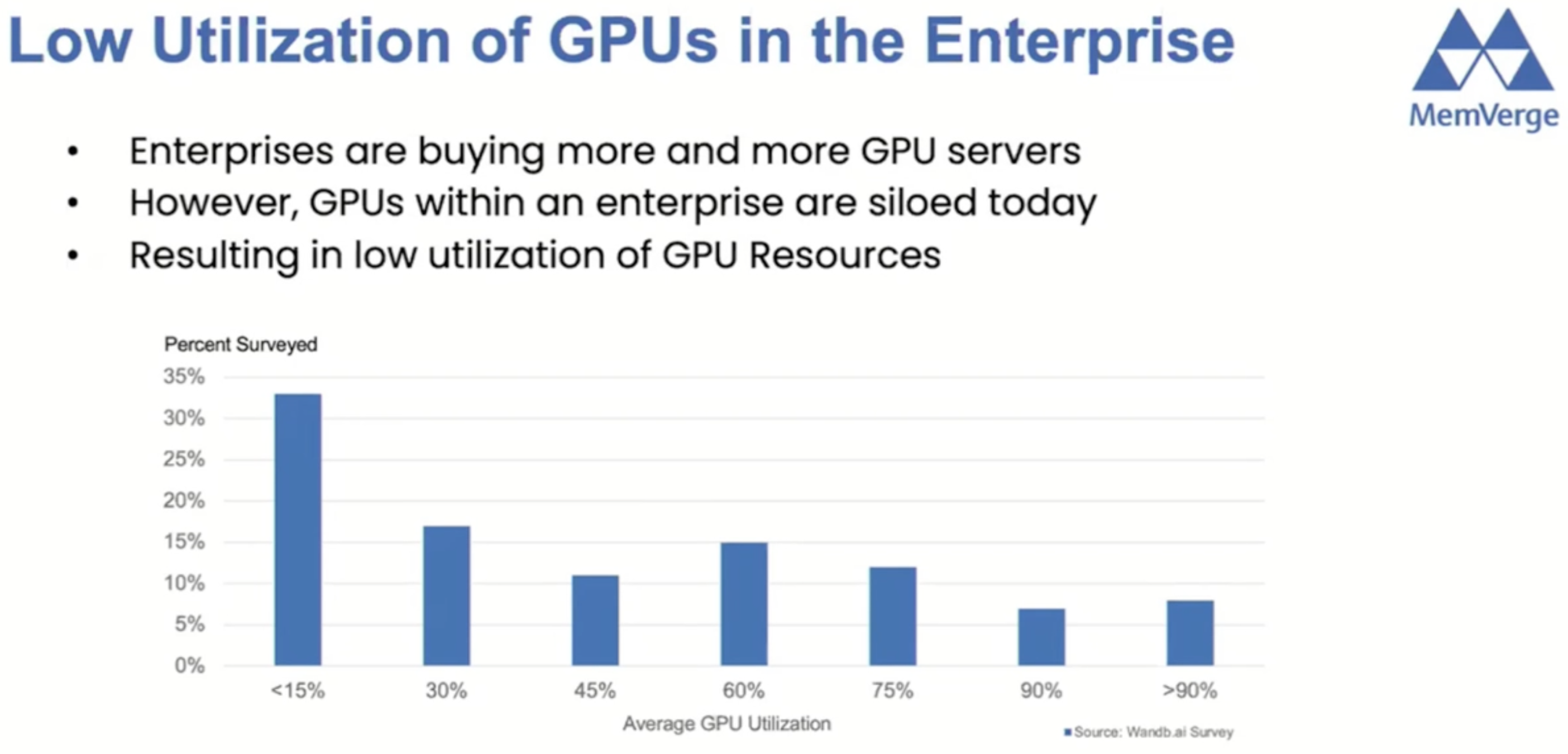

Today, workload placement in the public and/or private cloud is better understood, because cloud is now seen as an operating model rather than a location. However, deciding through a business lens where AI workloads are best placed across these deployment locations is still an open problem space. It requires a better resource scheduler to optimize across fine-tuning, inference, and GPU-as-a-Service (GPUaaS) needs within the Enterprise.

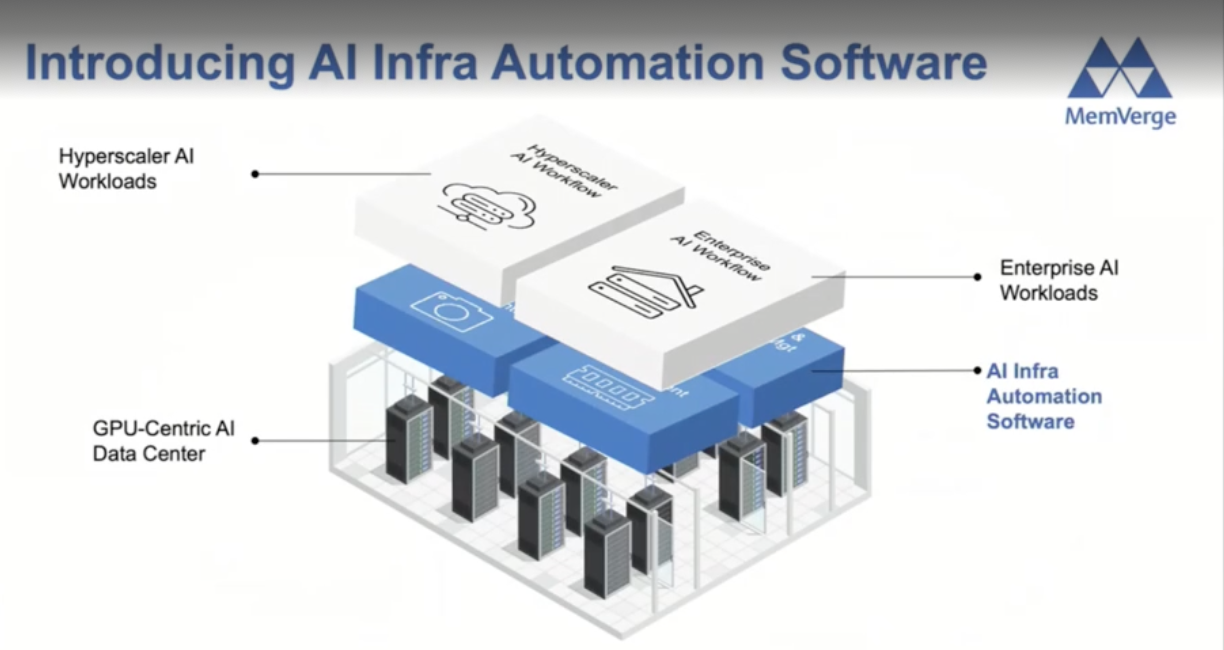

MemVerge AI Infrastructure Automation Software

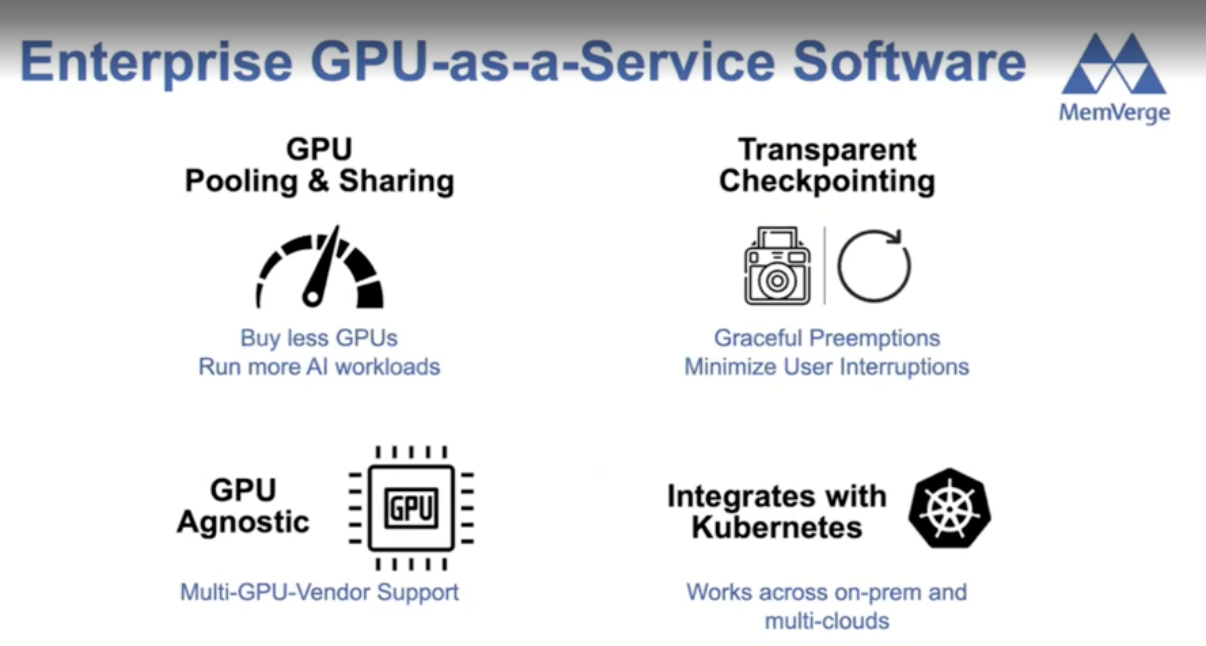

The MemVerge story is about simplifying smarter scheduling of valuable GPU-enabled resources. Their scheduling takes into account memory partitioning for fractional GPUs with graceful preemption, as opposed to the various GPU virtualization alternatives.
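To make "fractional GPU with graceful preemption" concrete, here is a minimal Python sketch of the general idea, not MemVerge's scheduler. Each job asks for a slice of GPU memory, and new jobs go into spare memory first-fit. A higher-priority job that does not fit may evict lower-priority jobs on one GPU, lowest priority first. The `Job` and `Gpu` classes, the `place` function, and the 80 GB capacities are hypothetical. In a real system the evicted jobs would be checkpointed and requeued rather than killed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    mem_gb: int    # requested slice of GPU memory
    priority: int  # higher number = more important


@dataclass
class Gpu:
    name: str
    capacity_gb: int
    jobs: list[Job] = field(default_factory=list)

    def free_gb(self) -> int:
        return self.capacity_gb - sum(j.mem_gb for j in self.jobs)


def place(job: Job, gpus: list[Gpu]) -> tuple[str | None, list[str]]:
    """Place a fractional-GPU job; return (GPU name or None, preempted job names)."""
    # 1. First fit: use spare memory without disturbing running jobs.
    for gpu in gpus:
        if gpu.free_gb() >= job.mem_gb:
            gpu.jobs.append(job)
            return gpu.name, []
    # 2. Graceful preemption: on the first GPU where it is enough, evict
    #    lower-priority jobs (lowest priority first) until the new job fits.
    for gpu in gpus:
        victims = sorted((j for j in gpu.jobs if j.priority < job.priority),
                         key=lambda j: j.priority)
        reclaimable, chosen = gpu.free_gb(), []
        for victim in victims:
            if reclaimable >= job.mem_gb:
                break
            chosen.append(victim)
            reclaimable += victim.mem_gb
        if reclaimable >= job.mem_gb:
            for victim in chosen:
                gpu.jobs.remove(victim)  # hypothetically: checkpoint, then requeue
            gpu.jobs.append(job)
            return gpu.name, [v.name for v in chosen]
    return None, []  # no room even after preemption: the job waits in the queue


gpus = [Gpu("gpu0", 80), Gpu("gpu1", 80)]
place(Job("notebook-a", 40, priority=1), gpus)
place(Job("notebook-b", 40, priority=1), gpus)
place(Job("train-c", 80, priority=1), gpus)
print(place(Job("inference-d", 40, priority=5), gpus))  # ('gpu0', ['notebook-a'])
```

Evicting the lowest-priority jobs first protects important work, but it does not minimize how many jobs get evicted. A production scheduler would likely weigh both.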

Weights & Biases: Monitor & Improve GPU Usage for Model Training

MemVerge Enterprise GPU-as-a-Service Software

For example, if your company is investing in a 512-node GPU cluster, a GPUaaS-ready environment takes more than simply procuring the GPUs and servers. To be successful, the software stack must support consumption patterns that reach the widest possible audience within the Enterprise, so that these hardware assets are utilized as fully as possible.
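A back-of-the-envelope calculation shows why utilization is the number that matters at this scale. This Python sketch assumes 8 GPUs per node, a 30-day month, and a hypothetical 1,474,560 busy GPU-hours. None of these figures come from the session, and the helper names are illustrative.

```python
def available_gpu_hours(nodes: int, gpus_per_node: int, hours: int) -> int:
    """Total GPU-hours the cluster could deliver in the period."""
    return nodes * gpus_per_node * hours


def utilization(busy_gpu_hours: float, nodes: int, gpus_per_node: int, hours: int) -> float:
    """Fraction of available GPU-hours that did useful work."""
    return busy_gpu_hours / available_gpu_hours(nodes, gpus_per_node, hours)


NODES = 512          # cluster size from the example above
GPUS_PER_NODE = 8    # assumption: one 8-GPU server per node
HOURS = 30 * 24      # assumption: a 30-day month

capacity = available_gpu_hours(NODES, GPUS_PER_NODE, HOURS)
busy = 1_474_560     # hypothetical GPU-hours of useful work this month

print(f"capacity: {capacity:,} GPU-hours")                                   # 2,949,120
print(f"utilization: {utilization(busy, NODES, GPUS_PER_NODE, HOURS):.0%}")  # 50%
print(f"idle: {capacity - busy:,} GPU-hours")                                # 1,474,560
# Every 10 points of utilization gained puts this many GPU-hours to work:
print(f"10 points: {capacity // 10:,} GPU-hours")                            # 294,912
```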

MemVerge Memory Machine AI

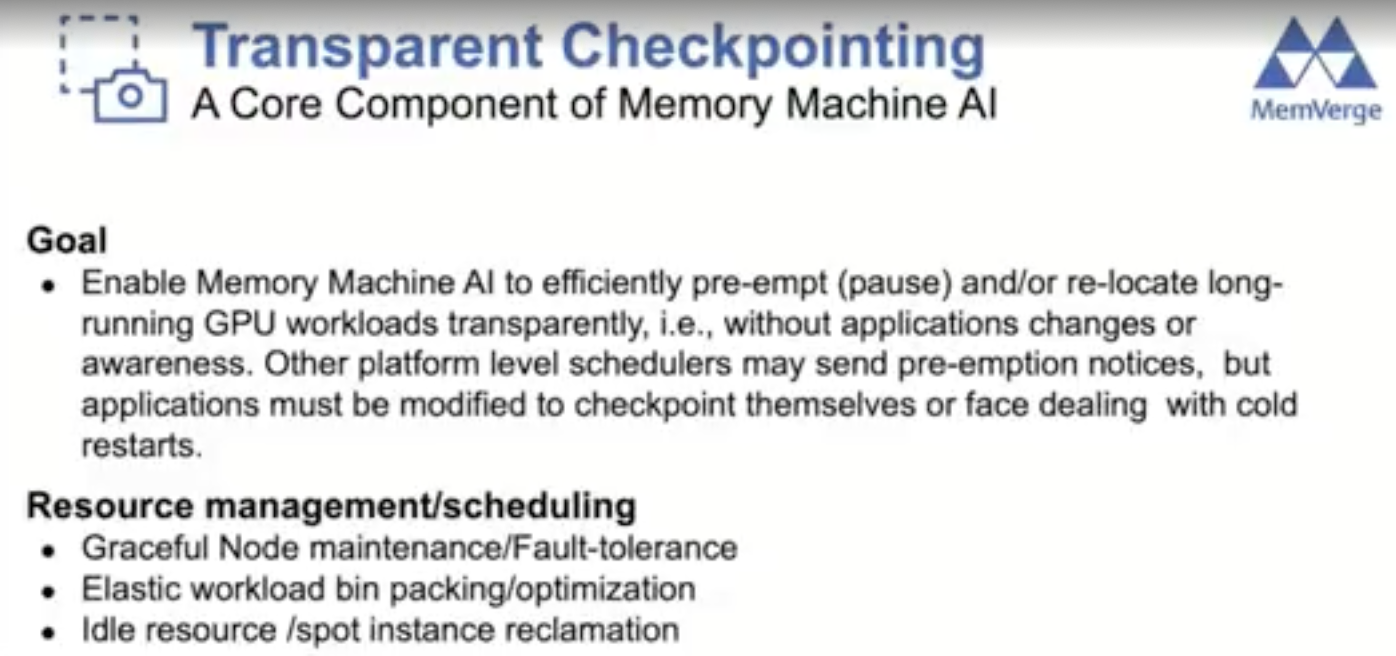

Steve Scargall made the case for “GPU Surfing”: moving a workload from wherever it happens to be running to where it should be running, without the user having to be aware of the infrastructure implications. The goal is to appeal both to those using HPC (High Performance Computing) with Slurm and to those using k8s (Kubernetes).

GPU Sharing Options

For example, if you are using PyTorch checkpoints, you can move a workload like-for-like (from one H100-enabled host to another H100-enabled host). By saving in-memory state from Host A to disk and then restoring it into memory on Host B, the workload can use GPUs flexibly under priority and preemption.
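The save-to-disk, restore-elsewhere pattern can be sketched in plain Python. This is an application-level checkpoint. The `train_steps`, `checkpoint`, and `restore` helpers are hypothetical, and a temporary directory stands in for storage that both hosts can reach. In PyTorch, the equivalent calls would be `torch.save(model.state_dict(), path)` and `model.load_state_dict(torch.load(path))`, plus the optimizer state. MemVerge's transparent checkpointing, by contrast, is described as capturing process state without changes to application code.

```python
import os
import pickle
import tempfile


def train_steps(state: dict, n: int) -> dict:
    """Stand-in for training: advance the step counter and update toy weights."""
    for _ in range(n):
        state["step"] += 1
        state["weights"] = [w * 0.9 + 0.1 for w in state["weights"]]
    return state


def checkpoint(state: dict, path: str) -> None:
    """Host A: persist in-memory state to shared storage, writing atomically."""
    tmp = path + ".tmp"
    with open(tmp, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp, path)  # readers never see a half-written checkpoint


def restore(path: str) -> dict:
    """Host B: load the saved state back into memory."""
    with open(path, "rb") as f:
        return pickle.load(f)


shared = tempfile.mkdtemp()               # stands in for storage both hosts reach
ckpt = os.path.join(shared, "job.ckpt")

state_a = train_steps({"step": 0, "weights": [0.0, 1.0]}, 5)  # runs on Host A
checkpoint(state_a, ckpt)                                    # preempted here
state_b = restore(ckpt)                                      # resumes on Host B
train_steps(state_b, 5)
print(state_b["step"])  # 10
```

The resumed run ends in exactly the same state as an uninterrupted 10-step run. That equivalence is what makes preemption "graceful" rather than lossy.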

MemVerge Borrowing a GPU

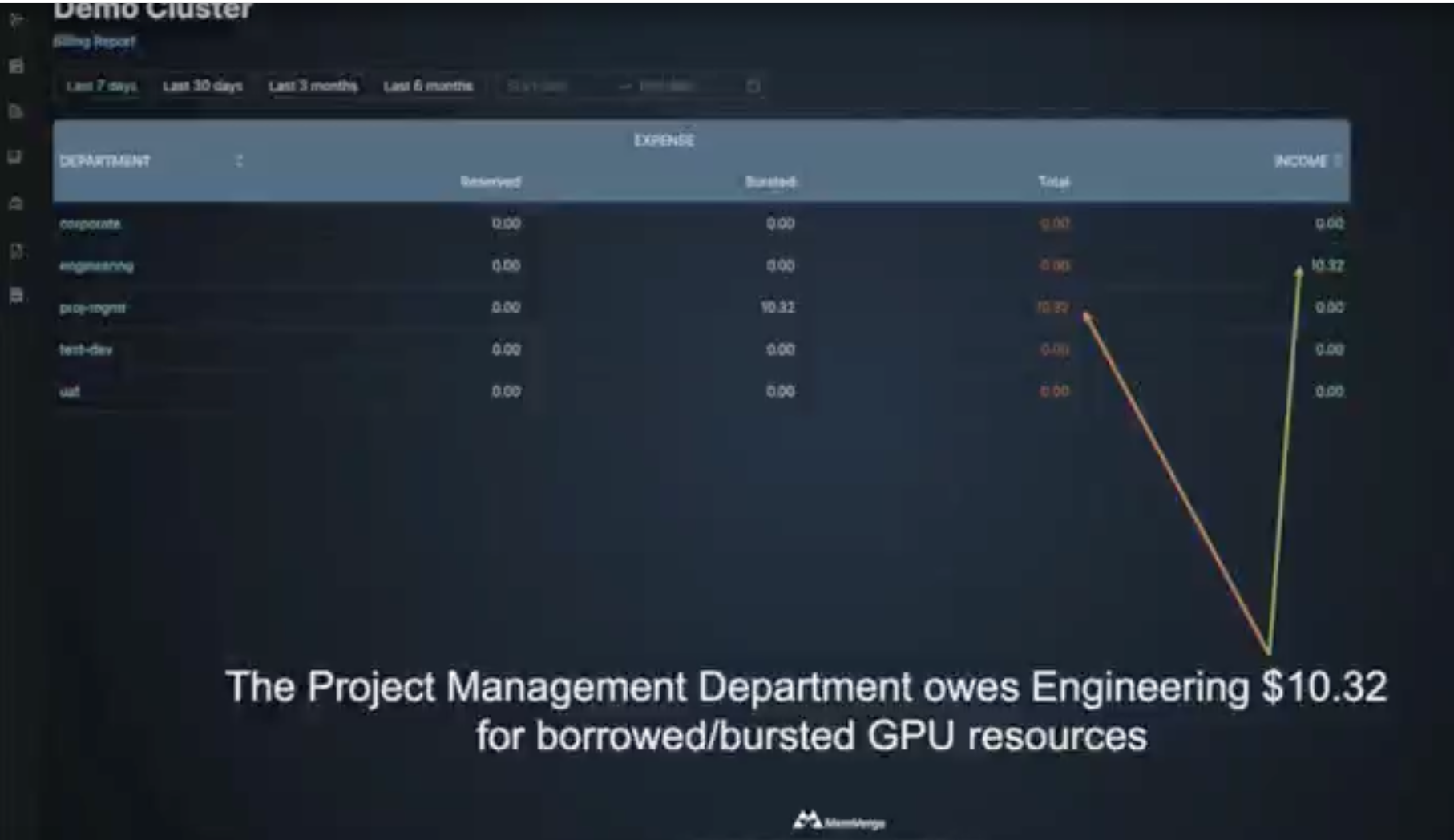

Projects are assigned a priority along with resources and node groups. These can be weighted to prefer A100, H100, or other GPU types. Borrowed or bursting capacity can also be billed, which permits inter-departmental cross-charges against an existing GPU allocation.
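Weighted GPU-type preferences and cross-charging for borrowed capacity can be illustrated with a short Python sketch. The `NodeGroup` class, the `allocate` and `cross_charge` helpers, the weights, and the per-GPU-hour `rate` are all hypothetical, and MemVerge's actual policy model may differ. Departments own node groups. A job picks the idle group whose GPU type carries the highest weight. Any GPU-hours it uses from another department's allocation are billed back to the borrowing department.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeGroup:
    name: str
    gpu_type: str
    owner: str      # department that holds this GPU allocation
    idle_gpus: int


def allocate(dept: str, gpus: int, weights: dict[str, float],
             groups: list[NodeGroup]) -> NodeGroup | None:
    """Pick the idle node group with the best GPU-type weight.

    On equal weights, the department's own allocation wins over borrowing.
    """
    candidates = [g for g in groups
                  if g.gpu_type in weights and g.idle_gpus >= gpus]
    if not candidates:
        return None
    best = max(candidates, key=lambda g: (weights[g.gpu_type], g.owner == dept))
    best.idle_gpus -= gpus
    return best


def cross_charge(dept: str, group: NodeGroup, gpus: int, hours: float,
                 rate: float) -> dict | None:
    """Bill borrowed GPU-hours to the borrowing department; own use is not billed."""
    if group.owner == dept:
        return None
    return {"from": dept, "to": group.owner, "amount": gpus * hours * rate}


groups = [NodeGroup("research-a100", "A100", "research", 8),
          NodeGroup("finance-h100", "H100", "finance", 8)]
weights = {"H100": 2.0, "A100": 1.0}  # prefer H100 over A100

chosen = allocate("research", 4, weights, groups)
print(chosen.name)                                   # finance-h100 (borrowed)
print(cross_charge("research", chosen, 4, 10, 2.5))  # {'from': 'research', 'to': 'finance', 'amount': 100.0}
```

Here GPU-type preference outranks ownership. A scheduler could just as reasonably exhaust a department's own allocation first and borrow only when it runs out.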

I would gladly pay you Tuesday for a GPU today

Bernie Wu covered the details of transparent checkpointing, the innovations MemVerge has brought to market, and where the company is headed next.

MemVerge Transparent Checkpointing

MemVerge Innovation: Memory Snapshotting / Checkpointing

Checkpointing State of the Union in 2025

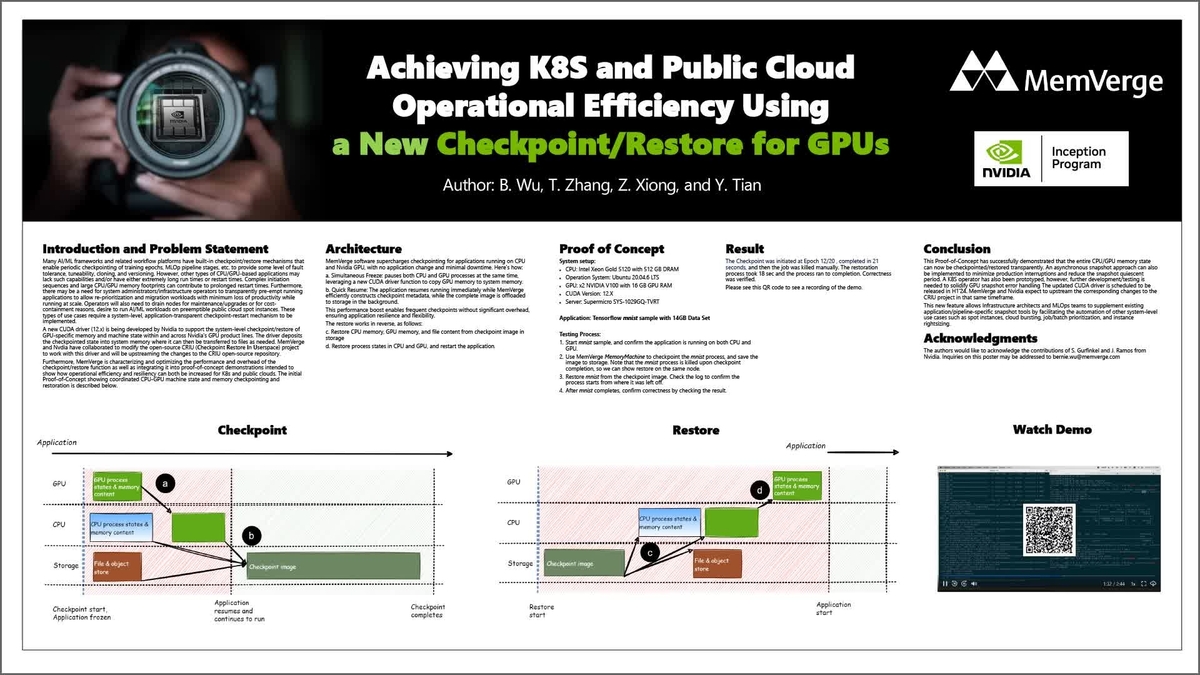

Achieving K8S and Public Cloud Operational Efficiency using a New Checkpoint/Restart Feature for GPUs

MemVerge Additional Use Cases

Steve Yatko, CEO and Founder of Oktay Technology, joined Charles Fan to share his experience and expertise in low-latency trading at Credit Suisse. He also described his boutique advisory practice, which guides Enterprise and government clients on journeys to unlock Enterprise-class AI systems. Above all, CEOs are pushing for current-day applications with real P&L impact, combining technologies pioneered in low-latency trading systems with the promise of Enterprise-class AI systems.

Now, MemVerge is making an impact on the economics of AI by extracting value from the ability to rent or lease resources and to put AI projects on ice. Resources that were previously hard-pinned to individual projects can now be mobilized. In other words, MemVerge allows companies to adopt a shared services and shared infrastructure model for AI workloads by enabling give back, not just show back or charge back. Resource loaning unblocks innovation for teams that would otherwise be resource constrained.

MemVerge Call To Action

About the Author

Jay Cuthrell

Chief Product Officer, NexusTek

Jay Cuthrell is a seasoned technology executive with extensive experience in driving innovation in IT, hybrid cloud, and multicloud solutions. As Chief Product Officer at NexusTek, he leads efforts in product strategy and marketing, building on a career that includes key leadership roles at IBM, Dell Technologies, and Faction, where he advanced AI/ML, platform engineering, and enterprise data services.